Archive

NetApp at Cisco Live : Another look into the future

It’s no secret that Cisco has been investing a LOT of resources into cloud, and from those investments, we’ve recently seen them release a corresponding amount of fascinating new technology that I think will change the IT landscape in some really big ways. For that reason, I think this year’s Cisco Live in Melbourne is going to have a lot of relevance to a broad cross section of the IT community, from application developers, all the way down to storage guys like us.

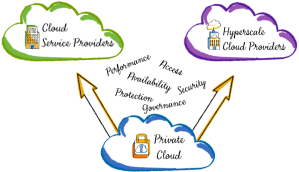

So I’m really pleased to be presenting a Cisco partner case study along with Anuj Aggarwal, who is the Technical Account Manager on the Cisco Alliance team for NetApp. Most of you won’t have met Anuj, but if you’ve got the time, you should get to know him while he’s down here. Anuj knows more about network security, and the joint work NetApp and Cisco have been doing on hybrid cloud, than anyone else I know, and this makes him a very interesting person to spend some time with, especially if you need to know more about the future of networking and storage in an increasingly cloudy IT environment.

I’m excited by this year’s presentation, because it expands on, and in many ways completes a lot of the work Cisco and NetApp have been doing since NetApp’s first appearance at Cisco Live in Melbourne in 2010 as a platinum sponsor, where we presented to a select few on SMT, or more formally Secure Multi-Tenancy.

Why private cloud needs an undo-key

A business mentor of mine once told me there are only four rational reasons why a company invests its capital, and those reasons are to improve revenue, decrease costs, reduce risk or improve agility. I asked if agility really deserved its own category, and he answered with a quote often attributed to Charles Darwin:

“It is not the strongest of the species that survives, nor the most intelligent that survives. It is the one that is the most adaptable to change”

He continued that improving revenue is actually almost arguable, because it’s the one thing over which the company has the least control, and that in a fast changing business environment, you’d be better off investing in agility so you can take advantage of uncertainty.

I was reminded of this recently because it’s been a little over ten years since Nick Carr wrote an article in the Harvard Business Review stating that IT doesn’t matter. I opened with this during NetApp’s recent Elevate conferences in Adelaide and Perth, and pointed out that IT that doesn’t improve top line revenue or a company’s agility is a recipe for a focus on nothing more than cost and risk reductions. I was surprised that my comment still provoked a pretty defensive reaction from some IT professionals.

As I talked about how IT infrastructure teams could learn a lot from agile software development methodologies, and that a datacenter built on software defined infrastructure would allow this, it struck me what was causing this defensive posturing. Risk management was THE key issue that had to be addressed before any of this could happen. To be sure, costs are important, but without a way of dealing with risk effectively, none of this agile, software defined, cloud nirvana was ever going to happen, or certainly not within the timeframes anyone outside of IT was going to tolerate.

This insight was particularly relevant to me because in IT, vendors talk a lot about private cloud to our customers. We talk about accelerating journeys, we talk about how it’s your cloud, we talk about the benefits and we publish case studies. At the same time our product organizations spend increasingly large amounts of their development time and resources on delivering technology to create service catalogs, analytics capabilities and automation and self-service frameworks.

Internally, and between ourselves in the breaks between presentations at events and conferences, many of us wonder why, despite the clear business benefits and available technology, the adoption rate is much slower than we would have expected, and why many companies’ business units are leapfrogging their IT departments’ internal cloud developments to go directly to large public cloud offerings.

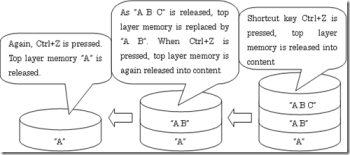

It wasn’t until I got home and I heard my wife say “That’s awesome, they’re teaching the concept of the undo-key” that I had my real epiphany. What she was talking about was a Kickstarter project called Robot Turtles, a board game created by Dan Shapiro of Google that teaches primary school kids the basics of programming. While the concept is awesome, it struck me that the ability to easily undo a mistake is so fundamental to Agile software development that it is one of the first concepts you would teach. It was also the reason why infrastructure agility was something that was talked about far more than it was done. People can’t take the same risks with their data infrastructure that they can with software development, or a word processing document, and the reason is that for almost all of us, there is no genuinely effective equivalent of Control-Z for our infrastructure.

Imagine that, in order to roll back a mistake in a word processing document, you first had to

- Open up a brand new document

- Copy all the text from the first document and paste it into the second document, one paragraph at a time

- Run a macro that read the formatting on the first document

- Paste the results of that macro into the second document

Then, if you made a mistake, imagine that you had to

- Delete your entire paragraph that had the mistake

- Copy the paragraph from the second document

- Find the portion of the script that had the formatting for the document you just copied back

- Run that portion of the script on the original document, and hope that it doesn’t affect any of the other paragraphs or muck up the indexing or cross referencing

Furthermore, imagine that your copy was usually twelve hours old, and you could only recover your data after you’d received permission via a formal change request that had to be approved by three managers who checked them into the change control systems, then arranged for them to be sent back, buried in soft peat for three years and then finally recycled as firelighters.

Clearly, nobody would use any software program that had those limitations, and yet that’s exactly the kind of thing infrastructure professionals have to deal with on a daily basis. It’s no wonder that their perception of risk management and that of the rest of the business are so different.

Agile methodologies deal with risk in a completely different way. They require that you build your progress on small iterative steps, and that at the end of each step you gain some insight, which you then turn into action. Continuous testing and continuous deployment significantly reduce the risks of major project failures previously associated with waterfall methodologies. Even with an entire data-center built on software defined infrastructure, without an easy way of testing new infrastructure builds, and fixing and correcting mistakes early, infrastructure operations will never be able to fully support the kinds of agility the business increasingly demands from IT. So long as internal IT lacks an effective undo-key, it will be stuck in the world of waterfall methodologies, and a cost effective, agile private cloud built on software defined principles will remain a future vision instead of a present day reality.

The nice thing from my perspective is that NetApp uniquely provides a well proven set of tools that provide a fine grained undo that works from a single document on a home drive, all the way up to a petabyte scale data-center. We provide a Control-Z that lets you innovate safely, and realize the benefits of private cloud on technology that is already in production in thousands of data centers.
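To make the undo-key idea a bit more concrete, here is a toy sketch in Python. It is not how any NetApp product is implemented; `SnapshotStore` and its method names are made up purely for illustration. It models a point-in-time snapshot that shares unchanged data with the live state, and a restore that rolls everything back in one step.

```python
from types import MappingProxyType


class SnapshotStore:
    """Toy key/value 'volume' with point-in-time snapshots (hypothetical)."""

    def __init__(self):
        self._live = {}        # current state: name -> immutable bytes
        self._snapshots = {}   # snapshot label -> read-only view of a past state

    def write(self, name, data):
        self._live[name] = bytes(data)

    def read(self, name):
        return self._live[name]

    def snapshot(self, label):
        # Copies only the name -> data references, not the data itself,
        # so taking a snapshot stays cheap however large the values are.
        self._snapshots[label] = MappingProxyType(dict(self._live))

    def restore(self, label):
        # The "Control-Z": put the live state back to the snapshot in one step.
        self._live = dict(self._snapshots[label])


store = SnapshotStore()
store.write("app.conf", b"threads=4")
store.snapshot("before-change")
store.write("app.conf", b"threads=400")   # the risky change
store.write("new.conf", b"oops")          # a side effect of that change
store.restore("before-change")            # undo both in one operation
```

After the restore, `app.conf` is back to its original contents and `new.conf` is gone, with no paragraph-by-paragraph copying and no change request buried in peat.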

Future blog posts will concentrate on specific technologies like SnapMirror, SnapCreator, and NetApp Shift and how they create and enable a Universal Data Platform that can be used to eliminate the risk that stands between where virtualization stands today, and a truly agile, hybrid cloud tomorrow.

Storage– Software Defined Since 1982

If you take that 3 step process for creating a “Software Defined” infrastructure that I outlined in my previous post, you could reasonably say that storage has been “software defined” since about 1982 (arguably as early as 1956 when the first disk drive made its appearance).

e.g.

- Step 1 – identify and then formally define a set of common functions or primitives performed by existing infrastructure that are optimally run in purpose built devices (e.g. hardware filled with interfaces and ASICs) – This becomes the "Data Plane".

- From a data storage perspective I have broken down what I see as the common storage primitives into four main categories. I’ll probably use these categories as a tool for functional comparisons of various Software Defined Storage implementations going into the future.

placement management – e.g. given a logical address and some data by a requestor, write that data to an underlying storage medium so that it can be subsequently retrieved using that address without the requestor needing to be aware of the physical characteristics of that underlying storage medium.

access management – e.g. given an address by a requestor, read data from an underlying storage medium and make it available to the requestor. Additionally in the case where multiple requestors may make simultaneous requests to place or access the same data, provide a mechanism to arbitrate that access.

copy management – e.g. given a set of source addresses and a range of target addresses, copy the data from the source to the target on behalf of the requestor

persistence management – in most storage systems this is an implied function, though increasingly with the rise of protocols such as CDMI, and XAM, data persistence SLOs are being explicitly defined at placement time. In most cases however, data must be stored until the device itself fails, and the device is generally expected to have a lifetime of multiple years.

- Step 2 – Create a protocol that manages those functions

- The great thing about standards is there’s so many of them … and the storage industry just LOVES forming standards bodies to create new protocols to manage the functions I described above. Many of them have been around for a while: SCSI was standardised in 1982, NFS in 1989, SMB in 1992 (kind of), OSD in 2004 and in more recent times we have seen implementations like XAM in 2010, and most recently CDMI which became an ISO standard in 2012.

Some of us get religious about these standards and which one should be used for what purposes. What I find interesting is that they all seem to be converging around a common set of functionality, so it’s possible that we will eventually see one storage protocol to rule them all, but I doubt it will happen any time soon. In the near term, whether we need to create another new protocol is debatable, but as of this moment I’m pretty impressed with the work being done at SNIA with CDMI, not as a “new replacement” but as something which leverages the work that’s already been done with the other protocols and fills in their gaps. But I’m getting WAY ahead of myself here.

- Step 3 – Create a standards compliant controller that runs on general purpose hardware (e.g. an intel server, virtualised or otherwise) that takes higher order service requests from applications and translates those into the primitives codified in step 1, over the protocol devised in step 2. – This becomes the "Control Plane"

- Well, if you accept that the existing storage protocol standards are functionally equivalent to the OpenFlow protocol in Software Defined Networking, then pretty much any modern operating system could function as a controller. Any modern hypervisor also acts as a controller, as does any storage array which uses SCSI protocols to talk to the disks at the back end, and in my view this is an accurate description.

- Each of these constructs acts as a standards compliant controller in a software defined storage infrastructure, with multiple levels of encapsulation and the consequent challenge that there is significant functional control overlap between these controllers. Over the next few posts I’ll go through what this encapsulation looks like, where the challenges and opportunities are in each level, the design choices we face, and build that up so we can see how close we are to achieving something that matches some of the hype around software defined storage.
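The three steps above can be sketched in a few lines of Python. This is only an illustration under my own assumptions: `DataPlane`, `Controller`, `clone_volume` and the retention labels are hypothetical names, not part of SCSI, NFS, CDMI or any product. The data plane exposes the four primitives, and the controller turns a higher order request ("clone this volume") into calls on those primitives.

```python
class DataPlane:
    """In-memory stand-in for a device exposing the four storage primitives."""

    def __init__(self):
        self._blocks = {}   # logical address -> data
        self._retain = {}   # logical address -> persistence SLO label

    def place(self, address, data, retention="until-device-failure"):
        # Placement management, with the persistence SLO stated at placement time.
        self._blocks[address] = bytes(data)
        self._retain[address] = retention

    def access(self, address):
        # Access management (arbitration between requestors is omitted here).
        return self._blocks[address]

    def copy(self, sources, targets):
        # Copy management: performed inside the device on the requestor's behalf.
        for src, dst in zip(sources, targets):
            self._blocks[dst] = self._blocks[src]
            self._retain[dst] = self._retain[src]

    def retention(self, address):
        # Persistence management: report the SLO recorded for an address.
        return self._retain[address]


class Controller:
    """Control plane: translates a higher order request into primitive calls."""

    def __init__(self, data_plane):
        self.dp = data_plane

    def clone_volume(self, src_base, dst_base, n_blocks):
        sources = [src_base + i for i in range(n_blocks)]
        targets = [dst_base + i for i in range(n_blocks)]
        self.dp.copy(sources, targets)


dp = DataPlane()
for i, chunk in enumerate([b"boot", b"data", b"logs"]):
    dp.place(100 + i, chunk, retention="7-years")

Controller(dp).clone_volume(src_base=100, dst_base=200, n_blocks=3)
```

In a real stack the call between `Controller` and `DataPlane` would travel over one of the protocols from step 2, and there would usually be several controllers layered on top of each other, which is where the overlap I mentioned comes from.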

It’s also worth noting that until I’ve reached my conclusion, much of what I’ve written and will write will not neatly match up with the analyst definitions of software defined storage. If you bear with me we’ll get there, and probably then some. My hope is that if you follow this journey you’ll be in a better position to take advantage of something that I’ll be referring to as “SLO Defined” storage (simply because I really don’t think that “Software Defined” is particularly useful as a label)

If you want to jump there now and get the analysts’ views, check out what IDC and Gartner have to say. For example, IDC’s definition of software defined storage from http://www.idc.com/getdoc.jsp?containerId=prUS24068713 says in part

software-based storage stacks should offer a full suite of storage services and federation between the underlying persistent data placement resources to enable data mobility of its tenants between these resources

The Gartner definition, which isn’t public, takes a slightly different approach and can be found in their document “2013 Planning Guide: Data Center, Infrastructure, Operations, Private Cloud and Desktop Transformation” where it talks about higher level functionality including the ability for upper level applications to define what storage objects they need with pre-defined SLOs and then have that automatically provisioned to them (or at least that is my take after a quick read of the document).

IMHO, both of these definitions have merit, and both go way beyond merely running array software in a VSA, or bundling software management functions into a hypervisor, or pretty much anything else that seems to pass for Software Defined Storage today, which is why I think it’s worth writing about …. In …. Painful …. Detail

As always, Comments and Criticisms are welcome.

John

Is VSA the future of Software Defined Storage? (In reply to DuncanYB)

I was reading a blog post by Duncan Epping here http://www.yellow-bricks.com/2013/04/24/re-is-vsa-the-future-of-software-defined-storage-openi/ around VSA’s and software defined storage, and put in one of my usual overly long replies when I thought it might make a reasonable blog post here, because it outlines a number of my key thoughts on this which I was planning on writing about later on. If you get the chance, read Duncan’s post as there’s some good stuff in the main blog as well as some interesting comments.

The following was my reply with some typo cleanup …

IMHO VSA’s will be an important part of the software defined storage (SDS) landscape but by no means are they the complete story. What is lacking in SDS is the equivalent of flow-tables in switches. If you go with the whole “separate the control plane from the data plane” definition of software defined anything, then you could reasonably argue that this is exactly what things like the VERITAS volume manager and file system did way back in the 80’s. For a whole stack of good reasons people chose to bifurcate the responsibility of managing that functionality increasingly into the storage and application layers, leaving those products with increasingly niche roles. The advent of SDS might swing that pendulum back towards 80’s style architecture for a while, but people tend towards vertically integrated solutions when the complexities of managing and integrating solutions themselves become economically unviable, and designing a reliable storage solution with high performance at large scale that caters for a large variety of workload types is very very hard to do well.

Going back to the lack of a storage equivalent of flow-tables, the trouble with SDS is that storage requirements are much less homogeneous than switching requirements and much harder to bring down to a small number of discrete functions that can be accelerated in hardware. I think that over time these will become more obvious, the first and most obvious of which is copy offload/management, but these requirements will probably evolve over time.

Rather than focus on building an industry/standards defined theoretical model, and trying to wedge/judge all the designs by that model, I think we’d be better served by loosening up the vertical integration of storage systems and then finding a variety of creative ways of leveraging large amounts of cheap CPU/Memory/Cache/Disk sitting in the virtualisation layer. VSA’s are a fairly coarse grained way of achieving this, but many of them don’t elegantly leverage tightly/vertically integrated infrastructure to accelerate or drive efficiencies where that is appropriate.

For example, there are ways of using the hypervisor resources as a “data plane” and leaving the control plane in the centralised array, such as NetApp FlashAccel. This is kind of counter-intuitive to the existing “control-plane lives in the hypervisor” model as the cache is seen as an extension of the hardware array rather than the array being seen as an extension of the hypervisor. To be fair the model isn’t that pure, as control portions are distributed between the array and the hypervisor. My point is that the boundaries become a lot fuzzier, and functionality will be divided and combined in a variety of interesting ways, and so long as storage is asked to perform so many different tasks, I think that’s a good thing.

While I love VSAs as a conceptually neat little package of functionality with tightly defined boundaries, (The DataONTAP VSA’s in particular, especially if you’re aware of their roadmap) I think that data and storage management will for the foreseeable future be a shared responsibility between applications, hypervisors, operating systems and arrays. The biggest challenge we face is co-ordinating these responsibilities and choosing the most efficient and automatable ways of combining them to give customers what they need without needlessly locking them into inflexible architecture choices.

Regards

John Martin

Software Defined Networking – The Next Frontier

The following part of the post came from content I wrote for Evolve, a newsletter we publish out of ANZ. It’s a little long and technical for an executive focused newsletter, which is partly why it gets a little bit rushed in the end. What I’d like to do is to expand a little more on what I believe are the choices that can be made when separating the control and data planes in a software defined storage architecture, where the industry, and in particular NetApp, is today, where things are likely to go, and most importantly how to get value from this architectural shift.

Introduction

CIOs face the constant challenge of turning rapid technological developments into business advantage. If this was not difficult enough, there are often times when multiple technologies are simultaneously released into the market, changing the IT landscape. The datacentre is currently on the cusp of such a revolution.

As it was for workforce mobility and cloud computing, it is the network that will be at the centre of these transformations. A network connects resources to intelligence and allows us to redefine what a datacenter is, and how we consume its properties.

It isn’t just incredible speed and massive bandwidth that is causing this transformation, but the fruition of an idea that’s been in development for the last decade, and that idea is Software-Defined Networking or SDN.

Software Defined Networking

This disruptive trend in the networking industry rediscovers the old idea of separating the control and data planes in network equipment. In other words, SDN liberates the higher-level network management functions from their ties to individual boxes and instead offers the vision of a “network operating system”. This allows networked applications to provision and control their networking needs using high-level open programming interfaces provided by an SDN “network controller”. The promise of this approach has meant that in a few short years, Software-Defined Networking has turned from a simple idea meant to enable new academic networking research into a potentially industry-changing technology trend.
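For readers who want to see what that separation means in practice, here is a deliberately simplified Python sketch. It is not the OpenFlow API or any vendor’s controller; `Switch`, `NetworkController` and the addresses and port numbers are hypothetical. Real flow tables match on many header fields and carry priorities and timeouts, none of which is modelled here. The switch only looks up its flow table, and defers to the controller when it has no matching rule.

```python
class NetworkController:
    """Control plane: holds network-wide policy and answers table misses."""

    def __init__(self, policy, default_port):
        self.policy = policy              # destination address -> output port
        self.default_port = default_port
        self.decisions = 0                # how often a switch had to ask

    def decide(self, dst):
        self.decisions += 1
        return self.policy.get(dst, self.default_port)


class Switch:
    """Data plane: forwards from its flow table, asks the controller on a miss."""

    def __init__(self, controller):
        self.flow_table = {}              # destination address -> output port
        self.controller = controller

    def forward(self, dst):
        if dst not in self.flow_table:
            # Table miss: let the control plane decide, then cache the rule.
            self.flow_table[dst] = self.controller.decide(dst)
        return self.flow_table[dst]


controller = NetworkController({"10.0.0.5": 2, "10.0.0.9": 3}, default_port=1)
switch = Switch(controller)
ports = [switch.forward(d) for d in ("10.0.0.5", "10.0.0.5", "10.0.0.7")]
```

The second packet to `10.0.0.5` never reaches the controller, because the rule is already in the flow table. That is the essence of the split: policy lives in one programmable place, while forwarding stays fast and simple.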

The reason for this is that the network virtualization technology that is part of SDN is the missing piece that completes the vision of a software-defined datacenter, where compute, network and storage resources are elastic and dynamically adaptable. This network virtualization not only completes this vision, it raises the bar on how the different virtualized components integrate and interact in new, direct and more dynamic ways. This changes what IT will expect from their storage infrastructure.

SDN and the implications for Storage

Infrastructure managers who see the promise of a software defined datacentre are beginning to see storage as an important part of the infrastructure they desire to manage within the context of an SDN. However, this is only possible if the storage infrastructure itself can be separated between software that controls and manages data, and the infrastructure that stores, copies and retrieves that data. In short, storage needs to have its own control and data planes, working seamlessly as an extension of the SDN infrastructure that will be the core of the next generation datacentre.

Part of the reason for wanting to separate the control plane and liberate the storage control software from the hardware is that software defined storage allows offloading the computationally heavy aspects of storage management related functions like RDMA protocol handling, advanced data lifecycle management, caching and compression. The availability of large amounts of CPU power within private and public clouds opens all kinds of possibilities to both network and storage management that were simply not feasible before.

With more intelligence built into the Control-Plane, storage architects are now able to take full advantage of the other two major changes in the Data-Plane. The first, and perhaps the most interesting is the increasing affordability of solid state memory such as Flash and post-Flash technology such as PCM and STT-RAM.

Optimising Performance

Phase Change Memory (PCM) and Spin-transfer torque random-access memory (STT-RAM), have the access speeds and byte addressable characteristics of the Dynamic RAM (DRAM) used in servers today, with the added and transformational benefit of the solid state persistence of Flash. These technologies are significantly more expensive than Flash is today, but the predictions are these technologies will surpass even the cheapest forms of Flash memory within five or six years. Regardless of which technology wins, the trends are clear; within a few years the majority of a server’s storage performance requirements will be served from some form of solid state storage within the server itself. When this is combined with new network technology and software like SAP HANA, it has major implications for storage design and implementation. Imagine how your infrastructure would change if every server had terabytes of super-fast solid state memory connected together via ultra-low latency intelligent networking. The fact is that many of the reasons we implement shared storage for mission critical applications today, would simply disappear.

Optimising Capacity

The second major change is the demand to store and process massive amounts of data that increases as we are able to extract more value from that data through Big Data analysis. This coincides with a dramatic reduction in the cost of storing that data. Very high density SATA drives with capacities in excess of 10TB per disk are coming, but in order to surpass some hard quantum-physics level limitations they will use new storage techniques such as shingled writes and will be built optimally to store, but never overwrite or erase data. This means the storage characteristics at the Data-Plane will be fundamentally different from those we are familiar with today. Furthermore, even with these improvements in the costs and density of magnetic disk, the economics of power consumption and datacentre real-estate means that tape is becoming attractive again for long term archival storage. Finally, the economies of scale that large cloud providers have and the availability of massive computing power they are able to place in close proximity to that data means that those cloud providers will have a compelling value proposition for storing a large proportion of an organisation’s cold data.

Regardless of where and how this data is stored, the challenges of securing and finding that data, and managing the lifecycles of this massive amount of information means traditional methods of using files, folders and directories simply won’t be viable in the long term. New access and management techniques built on top of object based access to data such as Amazon’s S3 and the open standards based CDMI interfaces will be the management and data access protocols of choice.

Conclusion

In the end the only way to effectively combine the speed and performance of solid-state storage with the scale and price advantages of capacity optimised storage is by using a software defined storage infrastructure. It is the intelligence of the separated Control-Plane powered by commodity CPU that allows infrastructure managers and datacentre architects to take advantage of these two massive trends.
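As a rough illustration of the kind of decision a separated control plane can make, here is a small Python sketch that picks the cheapest tier which still meets a latency objective. The tier names, latencies and relative costs are invented for the example and are not measurements of any real product.

```python
from dataclasses import dataclass


@dataclass
class Tier:
    name: str
    latency_ms: float     # assumed typical access latency
    cost_per_gb: float    # assumed relative cost, arbitrary units


def choose_tier(tiers, max_latency_ms):
    """Pick the cheapest tier that still meets the latency objective."""
    eligible = [t for t in tiers if t.latency_ms <= max_latency_ms]
    if not eligible:
        raise ValueError("no tier satisfies the requested latency")
    return min(eligible, key=lambda t: t.cost_per_gb)


# Illustrative numbers only.
TIERS = [
    Tier("server-flash", latency_ms=0.1, cost_per_gb=10.0),
    Tier("capacity-disk", latency_ms=10.0, cost_per_gb=1.0),
    Tier("cloud-archive", latency_ms=5000.0, cost_per_gb=0.1),
]

hot = choose_tier(TIERS, max_latency_ms=1.0)
warm = choose_tier(TIERS, max_latency_ms=50.0)
cold = choose_tier(TIERS, max_latency_ms=60_000.0)
```

The application states the service level it needs, and the control plane decides where the data lives. The data plane underneath can then change, from Flash to PCM or from disk to cloud, without the application having to know.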

While this all talks about what will happen in the future, unlike other vendors who are only just beginning to talk about building a software defined storage infrastructure, NetApp has been planning for this future for many years now.

- Clustered Data-ONTAP was built on the principle of separating the Data-Plane and the Control-Plane and is ready to take advantage of the trends in software defined networking as they evolve and are deployed into datacentres over the next few years

- NetApp’s fully supported ONTAP-Edge software runs in a virtual machine, allowing the full power of ONTAP’s advanced data management functionality on commodity DAS, and NetApp’s V-Series controllers perform the same function at extreme scale for the largest and most mission critical environments

- NetApp has released at no cost to the customer Flash-Accel technology that allows commodity SSD’s and 3rd Party PCI based Flash cards to act as an extension of our storage cache for virtualized environments. This extends the work we have done in our separation of control and data-planes for our existing customers who have not yet moved to Clustered Data ONTAP

- NetApp has partnered with Amazon to provide private storage for AWS which allows the massive on-demand compute power to be coupled with NetApp’s storage in Amazon’s datacenters

- NetApp already provides open standards based advanced programming and automation interfaces through offerings such as NetApp Workflow Automation, the Cloud Data Management Interface and SMI-S, and continues to lead the industry in providing programmable software defined storage. These aren’t just technology tick box items, but technology that drives significant competitive advantages for solutions such as ING DIRECT’s “Bank in a Box”.

These are just a few of the things we’ve already done. The foundations have already been set, and what NetApp will be building and bringing to the market over the next few years will truly redefine what storage is inside the datacenter, and the value it can bring to IT and the organisations it serves.