NetApp at Cisco Live: Another look into the future

It’s no secret that Cisco has been investing a LOT of resources into cloud, and from those investments, we’ve recently seen them release a corresponding amount of fascinating new technology that I think will change the IT landscape in some really big ways. For that reason, I think this year’s Cisco Live in Melbourne is going to have a lot of relevance to a broad cross section of the IT community, from application developers, all the way down to storage guys like us.

So I’m really pleased to be presenting a Cisco partner case study along with Anuj Aggarwal, who is the Technical Account Manager on the Cisco Alliance team for NetApp. Most of you won’t have met Anuj, but if you’ve got the time, you should get to know him while he’s down here. Anuj knows more about network security, and the joint work NetApp and Cisco have been doing on hybrid cloud, than anyone else I know, and this makes him a very interesting person to spend some time with, especially if you need to know more about the future of networking and storage in an increasingly cloudy IT environment.

I’m excited by this year’s presentation, because it expands on, and in many ways completes a lot of the work Cisco and NetApp have been doing since NetApp’s first appearance at Cisco Live in Melbourne in 2010 as a platinum sponsor, where we presented to a select few on SMT, or more formally Secure Multi-Tenancy.

Why private cloud needs an undo-key

A business mentor of mine once told me there are only four rational reasons why a company invests its capital, and those reasons are to improve revenue, decrease costs, reduce risk or improve agility. I asked if agility really deserved its own category, and he answered with a quote often attributed to Charles Darwin:

“It is not the strongest of the species that survives, nor the most intelligent that survives. It is the one that is the most adaptable to change”

He went on to say that the case for improving revenue is actually the most debatable of the four, because revenue is the one thing over which the company has the least control, and that in a fast-changing business environment you’d be better off investing in agility so you can take advantage of uncertainty.

I was reminded of this recently because it’s been a little over ten years since Nick Carr wrote an article in the Harvard Business Review arguing that IT doesn’t matter. I opened with this during NetApp’s recent Elevate conferences in Adelaide and Perth, and pointed out that IT that doesn’t improve top line revenue or a company’s agility is a recipe for a focus on nothing more than cost and risk reductions. I was surprised that my comment still provoked a pretty defensive response from some IT professionals.

As I talked about how IT infrastructure teams could learn a lot from agile software development methodologies, and that a datacenter built on software defined infrastructure would allow this, it struck me what was causing this defensive posturing. Risk management was THE key issue that had to be addressed before any of this could happen. To be sure, costs are important, but without a way of dealing with risk effectively, none of this agile, software defined, cloud nirvana was ever going to happen, or certainly not within the timeframes anyone outside of IT was going to tolerate.

This insight was particularly relevant to me because in IT, vendors talk a lot about private cloud to our customers. We talk about accelerating journeys, we talk about how it’s your cloud, we talk about the benefits and we publish case studies. At the same time our product organizations spend increasingly large amounts of their development time and resources on delivering technology to create service catalogs, analytics capabilities and automation and self-service frameworks.

Internally, and between ourselves in the breaks between presentations at events and conferences, many of us wonder why, despite the clear business benefits and available technology, the adoption rate is much slower than we would have expected, and why many companies’ business units are leapfrogging their IT departments’ internal cloud developments to go directly to large public cloud offerings.

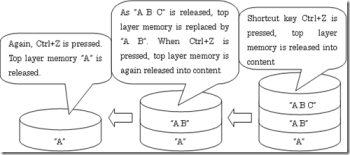

It wasn’t until I got home and I heard my wife say “That’s awesome, they’re teaching the concept of the undo-key” that I had my real epiphany. What she was talking about was a Kickstarter project called Robot Turtles, a board game created by Dan Shapiro of Google that teaches primary school kids the basics of programming. While the concept is awesome, it struck me that the ability to easily undo a mistake is so fundamental to Agile software development that it is one of the first concepts you would teach. It was also the reason why infrastructure agility was something that was talked about far more than it was done. People can’t take the same risks with their data infrastructure that they can with software development, or a word processing document, and the reason is that for almost all of us, there is no genuinely effective equivalent of Control-Z for our infrastructure.

Imagine that, in order to roll back a mistake in a word processing document, you first had to

- Open up a brand new document

- Copy all the text from the first document and paste it into the second document, one paragraph at a time

- Run a macro that reads the formatting of the first document

- Paste the results of that macro into the second document

Then, if you made a mistake, you had to

- Delete your entire paragraph that had the mistake

- Copy the paragraph from the second document

- Find the portion of the script that had the formatting for the document you just copied back

- Run that portion of the script on the original document, and hope that it doesn’t affect any of the other paragraphs or muck up the indexing or cross referencing

Furthermore, imagine that your copy was usually twelve hours old, and you could only recover your data after you’d received permission via a formal change request that had to be approved by three managers who checked them into the change control systems, then arranged for them to be sent back, buried in soft peat for three years and then finally recycled as firelighters.

Clearly, nobody would use any software program that had those limitations, and yet that’s exactly the kind of thing infrastructure professionals have to deal with on a daily basis. It’s no wonder that their perception of risk management and that of the rest of the business are so different.

Agile methodologies deal with risk in a completely different way: they require that you build your progress on small iterative steps, and that at the end of each step you gain some insight, which you then turn into action. Continuous testing and continuous deployment significantly reduce the risks of major project failures previously associated with waterfall methodologies. Even with an entire data-center built on software defined infrastructure, without an easy way of testing new infrastructure builds, and fixing and correcting mistakes early, infrastructure operations will never be able to fully support the kinds of agility the business increasingly demands from IT. So long as internal IT lacks an effective undo-key, it will be stuck in the world of waterfall methodologies, and a cost effective, agile private cloud built on software defined principles will remain a future vision instead of a present day reality.
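To make the undo-key idea concrete, here is a minimal sketch of a checkpoint-and-rollback pattern applied to a piece of infrastructure configuration. It is a toy, in-memory illustration only: the `SnapshotStore` class and the settings in it are hypothetical names I've made up for the example, and it says nothing about how any real storage or configuration product implements snapshots.

```python
import copy


class SnapshotStore:
    """Toy in-memory configuration store with snapshot and rollback (illustrative only)."""

    def __init__(self, state=None):
        self.state = dict(state or {})
        self._snapshots = []  # earlier states, oldest first

    def snapshot(self):
        """Record a copy of the current state and return its snapshot id."""
        self._snapshots.append(copy.deepcopy(self.state))
        return len(self._snapshots) - 1

    def rollback(self, snapshot_id):
        """Restore the state captured by snapshot_id and drop any later snapshots."""
        self.state = copy.deepcopy(self._snapshots[snapshot_id])
        del self._snapshots[snapshot_id + 1:]


# Hypothetical settings, purely for illustration.
store = SnapshotStore({"vlan": 10, "volume_size_gb": 500})
before_change = store.snapshot()     # checkpoint before the risky step
store.state["volume_size_gb"] = 5    # the mistake
store.rollback(before_change)        # the undo-key
print(store.state)
```

The point of the pattern is that taking the checkpoint is cheap enough to do before every small step, which is what makes small iterative steps safe in the first place.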

The nice thing from my perspective is that NetApp uniquely provides a well proven set of tools that provide fine-grained undo that works from a single document on a home drive, all the way up to a petabyte scale data-center. We provide a Control-Z that lets you innovate safely, and realize the benefits of private cloud on technology that is already in production in thousands of data centers.

Future blog posts will concentrate on specific technologies like SnapMirror, SnapCreator, and NetApp Shift and how they create and enable a Universal Data Platform that can be used to eliminate the risk that stands between where virtualization stands today, and a truly agile, hybrid cloud tomorrow.

BYOD – A Practical Approach

The major challenges with Bring Your Own Device (BYOD) are perhaps less about technology than they are about changing the mindset of IT departments in the face of the ongoing consumerisation of technology. Since the advent of personal computing in the 80’s, there has been a gradual shift to IT being a set of tools that surrounded an empowered user. However, the fact of the matter is that most of the IT that has been available simply wasn’t consumer ready. It was complex, expensive to own, insecure and impossible to manage. In the end, centralised IT was asked to take on the burden of fixing the mess.

The introduction of truly consumer ready devices and services has seen the realisation of the idea of the empowered user. Where the likes of IBM, Oracle and Microsoft were the biggest players in the first wave of IT, it is companies like Apple, Amazon, and Google who have been the dominant players in this second wave by creating an ecosystem of personal technology that is easy enough for a child to use at a price point that almost everyone can afford. The excitement generated by these new technologies means people want to use these new tools not only at home, but everywhere.

The fact that people are now prepared to pay for their own technology in exchange for the ability to have a choice in what they use hasn’t been lost on those who are asked to fund upgrades for ageing corporate infrastructure. The result is that BYOD is now firmly on most IT departments’ radar. However, the people who want to BYOD don’t want to use it simply as a way of lowering IT’s overheads; they want it to continue being a tool that allows them to consume services that enable them to do their job better. They want their internal IT to give them the service levels they’ve grown used to, they want immediacy, and they want it now.

For IT departments to take advantage of the benefits of consumer IT and BYOD, they must begin to shift their focus away from being custodians of technology towards being a provider of a service. The delivery of this service-centric IT may in fact provide the necessary political and budget justification for creating a new IT foundation built on an agile data infrastructure. To ease the transition to BYOD, IT departments should take a four-phase approach.

- Define your policy

Publish a service catalogue of what applications and services can be consumed from tablets and smartphones from within the corporate environment, while simultaneously setting expectations around the use of public cloud technologies for sensitive data.

- Focus on quick wins

Deploy virtual desktop infrastructure (VDI) for laptop users. This is something that can deliver a reasonably quick win, allows a broad range of end user devices to be brought into the corporate environment, and elegantly solves a number of security and supportability problems.

- Continue the dialogue

Establish an effective two way communication mechanism that allows users and IT to work together to prioritise which services need to be developed and deployed in-house, and which may need to be blocked or uninstalled.

- Develop the total solution

Expand on, or integrate the initial VDI deployment into a larger Infrastructure as a Service offering that will form the foundation for Software and Storage as a Service offerings. These will fulfil much broader demands and allow the BYOD strategy to be completely successful.

By focusing on quick wins, effective two way communication, and building an agile foundation for the future, BYOD efforts can be the catalyst for building a truly service driven IT department.

The four principles of Private Cloud – Part 2 – Service Analytics

While defining and publishing a set of IT services that meets or even exceeds the expectations of your end user to help them improve the agility of the business is the first step in delivering IT as a Service, we have to be able to make sure that we don’t over-promise and under-deliver.

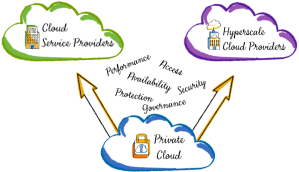

In order to keep our promises, the IT governance disciplines we have developed over the years around capacity planning, and infrastructure design and deployment, remain important but are transformed as we focus more on service optimisation. The luxury that we had in the past of assuring SLA conformance by over-engineering and over-provisioning within an application silo is no longer affordable. More to the point, this siloed mentality often leads to a lack of standardisation across the different silos, which makes subsequent automation incredibly difficult. On the other hand, in our drive for lower costs and greater agility, we can’t sacrifice the security, reliability and failure isolation that siloed infrastructure gave us, and which IT infrastructure professionals care so deeply about. The kinds of shared virtualised infrastructures that cloud computing is built on must not only give us greater efficiency and flexibility in our environment, they must at the same time give us the ability to increase our level of management and oversight, and improve our ability to take corrective action if it is required.

The old adage that “You can’t manage what you can’t measure” still holds true, but the trouble is that measurement in highly virtualised IT infrastructures presents a number of challenges. In larger IT organisations no one group holds all the information or expertise to troubleshoot performance problems, or to identify which resources are being consumed by a given user or application. We can’t assume that because, for example, the network, compute and storage resources are all meeting their SLA targets, the end user experience is also acceptable or meeting the SLA offered in the service catalog. Having team leaders sit in a “Come to Jesus” meeting with their arms folded saying things like “It can’t be the storage team’s fault, we bought the most expensive frame array in existence and over-engineered the hell out of it, it must be a problem in the network” simply doesn’t cut it in a world where service levels are king and nobody cares whose fault it is, or which widget is currently fubar.
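A small worked example shows why per-team SLA targets can all be green while the service the user sees is not. The numbers, tier names and the 25 ms end-to-end target below are invented purely for illustration, and the sketch makes the simplifying assumption that a request passes through each tier in sequence so the latencies add up.

```python
# Hypothetical per-tier latency measurements and targets, in milliseconds.
tiers = {
    "network": {"measured_ms": 4.0, "target_ms": 5.0},
    "compute": {"measured_ms": 18.0, "target_ms": 20.0},
    "storage": {"measured_ms": 9.0, "target_ms": 10.0},
}
END_TO_END_TARGET_MS = 25.0  # assumed promise made in the service catalog


def tiers_within_target(tiers):
    """True if every individual tier meets its own latency target."""
    return all(t["measured_ms"] <= t["target_ms"] for t in tiers.values())


def end_to_end_ms(tiers):
    """Total latency, assuming the request crosses each tier one after another."""
    return sum(t["measured_ms"] for t in tiers.values())


total = end_to_end_ms(tiers)
print("every tier meets its own target:", tiers_within_target(tiers))
print("end-to-end latency (ms):", total)
print("service SLA met:", total <= END_TO_END_TARGET_MS)
```

Here each team can truthfully say its own widget is fine, yet the service misses its target, which is why the measurement has to follow the service path rather than the org chart.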

The best way to address this is to make appropriate investments in tools and processes that go beyond simple monitoring. These tools need to enable IT staff to identify service paths and confirm the redundancy of those paths; set policies on service paths for accessibility, performance, and availability; intelligently analyze logs and other data to verify and report on whether policies are being adhered to; and provide capacity planning forecasts that allow optimal use of resources and support just-in-time purchasing processes.
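As a rough illustration of the capacity forecasting piece, the sketch below fits a straight line to daily usage samples and projects how many days remain before a pool fills up. The function name, the sample figures and the assumption of linear growth are all mine; real analytics tools will use richer models, so treat this as a sketch of the idea rather than a recommended method.

```python
def days_until_full(used_tb_by_day, capacity_tb):
    """Project days remaining until capacity is reached, using a least-squares line.

    used_tb_by_day holds one usage sample per day, oldest first.
    Returns None when usage is flat or shrinking.
    """
    n = len(used_tb_by_day)
    if n < 2:
        raise ValueError("need at least two samples to fit a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(used_tb_by_day) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(used_tb_by_day))
    denominator = sum((x - mean_x) ** 2 for x in range(n))
    slope = numerator / denominator          # growth in TB per day
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    day_full = (capacity_tb - intercept) / slope
    return max(0.0, day_full - (n - 1))      # days counted from the latest sample


# Hypothetical samples: a pool growing by 2 TB a day towards a 100 TB limit.
print(days_until_full([50, 52, 54, 56, 58], capacity_tb=100))
```

A forecast like this is what makes just-in-time purchasing practical: the order goes in when the projected date gets close to the supplier's lead time, not when someone notices the pool is nearly full.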

By building a solid Service Analytics capability, you can immediately improve overall governance and lay the foundations for successfully managing and optimising private cloud services via

- Consumption-based metering

- Dynamic capacity optimization

- Reduced management and resource costs

- Provenance and auditability of SLA conformance

The four principles of Private Cloud – Part 1 – Service Catalogs

Over the last few years NetApp in ANZ has helped a number of companies deploy infrastructure that allows them to begin providing IT as a Service, and we’ve found that success comes from focusing on four fundamental building blocks: a service catalog, service analytics and service automation, all of which provide a solid foundation for what the users see, which is ultimately self-service.

- A Service catalog defines your services with well-defined policies that help you automatically map service levels to infrastructure attributes

- Service analytics helps you to optimize your services with centralized monitoring, metering, and chargeback to enhance visibility and both cost and SLA management

- Automation helps you to rapidly deploy your services by integrating and automating provisioning, protection, and operational processes

- Self-service empowers IT and your end users by enabling service requests to be fulfilled through a self-service portal
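To show what mapping service levels to infrastructure attributes might look like in practice, here is a minimal sketch of a catalog lookup. The tier names, the attributes and the `resolve` function are hypothetical choices of mine for illustration; they are not taken from any particular product or customer catalog.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceLevel:
    """Infrastructure attributes implied by a catalog entry (illustrative fields)."""
    storage_media: str
    snapshot_interval_hours: int
    replicated: bool


# Hypothetical catalog: each published service level maps to concrete attributes.
CATALOG = {
    "gold": ServiceLevel("ssd", 1, True),
    "silver": ServiceLevel("hybrid", 4, True),
    "bronze": ServiceLevel("capacity_disk", 24, False),
}


def resolve(service_level):
    """Translate a requested service level into the attributes automation should apply."""
    try:
        return CATALOG[service_level]
    except KeyError:
        raise ValueError(f"'{service_level}' is not in the service catalog") from None


request = resolve("silver")
print(request.storage_media, request.snapshot_interval_hours, request.replicated)
```

Because the mapping is data rather than tribal knowledge, the same catalog can drive provisioning automation, metering and the self-service portal without each of them reinterpreting what "silver" means.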

The experience NetApp has had in helping customers like SunCorp and ING bank make this transition successfully has shown us that the first indispensable step in moving beyond siloed infrastructure optimisation towards end to end infrastructure and service optimisation is to create an actionable and automatable internal IT service catalogue.

In a traditional ITSM/ITIL model, the service catalogue acts as the focal point for interaction between IT and the business. It defines a set of discrete IT offerings that the business can request in order to service its own customers. These items include the kind of applications, the availability, performance, and resilience that the business or the end user requires, how to get access to it and how much it costs. A good public example of this can be found here

However, when you are looking at providing Infrastructure or Platforms as a Service (IaaS/PaaS), your consumer isn’t the business. The primary consumers of these services will probably be internal IT staff. This might include members of the IT infrastructure teams who need a complete set of resources such as virtual machines and network links to test new management software, or more critically, they may be business solution architects and application developers.

These are the people who are under extreme pressure from the business to develop and deliver new functionality that allows the business to be more competitive. For them, time to market and speed of development are the primary drivers. Money can often be found, especially from OpEx budgets, but time is irreplaceable. They may not know exactly what they need, but they know they need it NOW, and many of them are looking to Amazon and Google to give them their IT resources right now and on-demand.

Change control, stability, security, performance and data protection aren’t top of mind, and the service catalogues provided by the major cloud providers may be “good enough” in their eyes because they give them the one thing they need the most: immediacy, agility and time. In other words, those service catalogues turn time into money.

While this need for speed is understandable, it risks creating a new kind of uncontrolled and unsecured environment that introduces considerable unmanaged risks for the business. For this reason, IT shops looking to begin the journey towards fully automated self-service provisioning should make the first item on their internal IT service catalogue the ability to rapidly stand up standardised test/dev environments.

Too many ideas not enough time.

A couple of weeks ago I went to an internal NetApp event called Foresight where our field technology experts from around the world meet with our technical directors, product managers and senior executives. A lot of the time is spent talking about recent developments that the field is seeing develop into new trends and how that intersects with current technology roadmaps. We get to see new stuff that’s in development and generally get to spend about a week thinking and talking about futures. The cool thing about this is that while the day to day work of helping clients solve their pressing problems means that we often don’t get to see the forest for the trees, this kind of event lifts us high above the forest canopy to see a much broader picture.
