
Software Defined .. Why Now ? Why Bother ? …

Monday is my admin clean-up and research day, which makes it the best day for quadrant-II thinking, and most of what I’ve been thinking about recently is software defined storage, or if you’re an OpenStack advocate then you’d call it software-led storage.

After spending more than a few weeks thinking and researching, I’ve come to the conclusion that I’m not a big fan of either term, especially as it pertains to storage. Given the likelihood of an increasingly fuzzy set of layers between hardware and software, I think that “software-led” is probably a more useful way of talking about the future of storage infrastructure, but even so I’m still not convinced it’s the most useful description either. Nonetheless, for the moment a lot of people are talking about software-defined networks, datacenters and storage, so I’ll start to outline my breakdown of storage within that paradigm.

Software defined anything has its roots in software defined networking and OpenFlow, so the rest of this post goes through how I see Software Defined Networking, and then I’ll use that as a framework in future posts for talking about software defined storage.

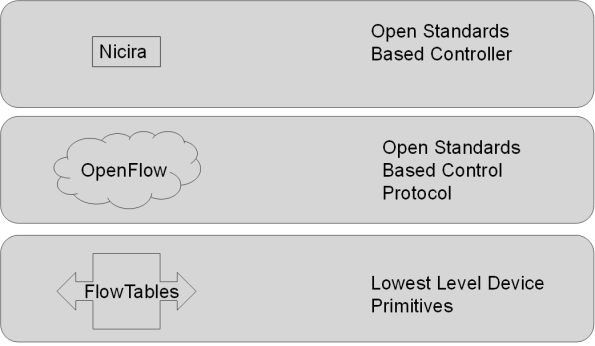

So how do you define “Software Defined”? I think if you’re going to use the term without it being just another way of saying virtualised, then you need to be talking about infrastructure built on the principle of a clean separation of hardware optimised functions from software control structures, or, in the parlance of Software Defined Networking, separating the data plane from the control plane. That means to create something that is truly Software Defined XXXX and not just a marketing-sexy-me-too-rebrand you have to:

- Identify and then formally define a set of common functions or primitives performed by existing infrastructure that are optimally run in purpose built devices (e.g. hardware filled with interfaces and ASICs). This becomes the “Data Plane”.

- Create a protocol that manages those functions.

- Create a standards compliant controller that runs on general purpose hardware (e.g. an Intel server, virtualised or otherwise) that takes higher order service requests from applications and translates those into the primitives codified in step 1, over the protocol devised in step 2. This becomes the “Control Plane”.
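To make those three steps a little more concrete, here is a minimal Python sketch. Everything in it is hypothetical and invented for illustration: the `forward` and `drop` primitives, the JSON message format, and the `Device` and `Controller` classes are assumptions, not OpenFlow or any vendor's API.

```python
import json
from dataclasses import dataclass

# Step 1: a small, formally defined set of data-plane primitives (hypothetical).
PRIMITIVES = {"forward", "drop"}


@dataclass(frozen=True)
class Instruction:
    primitive: str      # one of PRIMITIVES
    match: str          # what the primitive applies to, e.g. a destination
    argument: str = ""  # e.g. the output port for "forward"


# Step 2: a protocol that carries those primitives to the device.
# JSON is used here purely as a stand-in wire format.
def encode(instruction: Instruction) -> str:
    if instruction.primitive not in PRIMITIVES:
        raise ValueError(f"unknown primitive: {instruction.primitive}")
    return json.dumps(
        {"op": instruction.primitive, "match": instruction.match, "arg": instruction.argument}
    )


class Device:
    """Data plane: a purpose-built box that only understands the primitives."""

    def __init__(self, name: str):
        self.name = name
        self.installed = []

    def receive(self, message: str) -> None:
        self.installed.append(json.loads(message))


# Step 3: a controller on general purpose hardware that turns a higher order
# request ("connect me to this destination") into primitives on each device.
class Controller:
    def __init__(self, paths: dict):
        # paths: destination -> list of (device, output port) hops, assumed known
        self.paths = paths

    def connect(self, destination: str) -> None:
        for device, port in self.paths[destination]:
            device.receive(encode(Instruction("forward", destination, port)))


edge = Device("edge-switch")
core = Device("core-switch")
controller = Controller({"app-server": [(edge, "port-3"), (core, "port-7")]})
controller.connect("app-server")
```

The point of the sketch is that the application only ever talks to the controller, and the devices only ever see the primitives, which is the clean separation described above.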

The prime example of this is in the software defined networking world, where it could be something that looks like this …

So why did this happen in networking, and not storage or compute ? Why now ? And why bother ?

As to why it happened in networking, there are half a bazillion blogs out there on the subject, of which I’ve only read a small fraction, but from my perspective, I reckon it happened for the following reasons:

- By the very nature of networking, customers have demanded that networking vendors inter-operate with other vendors’ equipment in as seamless a fashion as possible.

- there has been one absolutely dominant player in the market at pretty much all times along with a very well supported standards body.

- Networking subsequently evolved to the point where there is one dominant layer-2 implementation (Ethernet) with one dominant layer-3 implementation (IP), and a fairly small number of upper level protocols above that (TCP/UDP/HTTP etc).

- This has driven network equipment from disparate vendors towards very similar functionality, which gave the developers of OpenFlow the opportunity to identify the commonality of flow-tables in hardware, and it is on that commonality that the elegant separation of control and data planes in SDN is built.
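For anyone who hasn’t looked at a flow-table before, the common idea is simply “match some header fields, then apply an action, highest priority first”. The following is a minimal Python sketch of that idea only. The field names, the action strings and the choice to hand unmatched packets to the controller are assumptions made for illustration, not the OpenFlow specification.

```python
from dataclasses import dataclass, field


@dataclass
class FlowEntry:
    priority: int
    action: str
    # Header field -> required value. A field left out acts as a wildcard.
    match: dict = field(default_factory=dict)

    def matches(self, packet: dict) -> bool:
        return all(packet.get(name) == value for name, value in self.match.items())


class FlowTable:
    def __init__(self):
        self.entries = []

    def add(self, entry: FlowEntry) -> None:
        self.entries.append(entry)
        # Keep the highest priority entry first so lookup can stop at the first hit.
        self.entries.sort(key=lambda e: e.priority, reverse=True)

    def lookup(self, packet: dict) -> str:
        for entry in self.entries:
            if entry.matches(packet):
                return entry.action
        # Assumed miss behaviour for this sketch: ask the control plane.
        return "send-to-controller"


table = FlowTable()
table.add(FlowEntry(priority=10, action="output:1", match={"ip_dst": "10.0.0.5"}))
table.add(FlowEntry(priority=20, action="drop", match={"ip_dst": "10.0.0.5", "tcp_dst": 23}))
table.add(FlowEntry(priority=1, action="output:2", match={"eth_type": 0x0800}))

telnet_packet = {"eth_type": 0x0800, "ip_dst": "10.0.0.5", "tcp_dst": 23}
print(table.lookup(telnet_packet))
```

Because every vendor’s hardware ends up doing some variation of this lookup, a single protocol can program all of them.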

Like many “new and revolutionary ideas”, it’s probably worth noting that this revolutionary “new” architecture has been evolving since at least 2001, when the IETF started the “Forwarding and Control Element Separation” (ForCES) working group, and arguably before that, back to 1996 with things like General Switch Management Protocol (GSMP).

But even if you can do this clean separation, why bother ? The development of OpenFlow wasn’t driven by market requirements, it was developed to let researchers run interesting experiments on existing large scale university campus networks. While that’s a very cool thing to do as a researcher, running “experiments” on a large scale enterprise infrastructure isn’t something I’ve ever had much success with. About as adventurous as I get is asking for a vlan that spans two datacentres, and for the most part whenever I’ve suggested stuff like that in the past, I get one of those “Put the network diagram down … and STEP AWAY” looks from the network guys. I can only imagine what would happen if I said “Hey I’ve got this really cool idea for encapsulating fibre channel over token ring and running it on your existing Ethernet infrastructure”. Which begs the question, why on earth would anyone in Enterprise-IT want to implement something this radical ?

The answer for the most part is .. they don’t. Sure there is a promise that opening up the infrastructure will lead to more competition and that will reduce prices, but the last time I looked, the networking industry was already pretty competitive. Even if you were to pull a datacentre class switch apart into cheap basic hardware and smart software running on an Intel-box, the value that vendors like Cisco bring in terms of scalability, quality assurance, interoperability testing, support, professional services etc, will mean that in all likelihood, customers will be willing to pay a premium for their solutions, and Cisco and others like them may become even more profitable as a result. As a parallel case, there are plenty of free database offerings out there, and yet Oracle is doing just fine. You might expect that if “Software Defined” was something everyone now uses as a prime buying criterion you might see Larry Ellison extolling the virtues of a “Software Defined Database”. OK, maybe not given his rather sceptical comments about cloud in the early days, but they’re going in exactly the opposite direction, increasingly embedding more of their software into vertically integrated hardware solutions precisely because there is continued ongoing demand from enterprise customers for tightly integrated hardware/software solutions.

So if SDN isn’t likely to significantly reduce costs, and there isn’t an organic pent up demand within the enterprise, then where is the payoff for the large risks that come with developing and deploying any new technology ?

The answer to that question lies in the standardisation and maturity of today’s network protocols that led to the commonality expressed in flow-tables. The core protocols of TCP/IP were developed almost forty years ago and were built not only on a set of solid principles that have stood the test of time, but also on what were, in 1973, some very reasonable assumptions. Unfortunately some of those assumptions no longer hold true, e.g. there was an assumption that a machine with an IP address won’t magically teleport from one physical location to another, yet this is exactly what happens when you try to migrate a virtual machine from one datacenter to the next. It is exactly those kinds of assumptions that are now causing problems for the largest consumers of network equipment: the large-scale cloud and telecom service providers.

That is why Software Defined Networking is suddenly interesting. For many businesses, IT infrastructure isn’t a competitive differentiator (it could be, and it should be, but right now it isn’t), but there are some very large customers, with some very large IT budgets, for whom IT infrastructure is a core enabler of their business, and who are willing to take on the risk of a new approach in the promise of disruptive innovation. These people aren’t just dreamers with fists full of VC dollars, but some of the networking industry’s largest and most influential customers. Other agile enterprise customers who understand how to leverage IT infrastructure for competitive advantage will also benefit from the investments of these larger organisations, but for most enterprise customers, what passes today for software defined networking will be restricted to virtual switches inside their virtual server infrastructure, and that, while useful, doesn’t exactly fit the definition I used at the beginning of this post.

Which brings me to storage .. For a number of vendors, a Virtual Storage Array = Software Defined storage, and while that’s reasonably valid, I also think it’s a bit of a half measure. I’m not saying that because I don’t like VSAs, I do, I think they’re great, but I don’t think that they’re the best example of what a software defined storage infrastructure can do. They might be part of it, but they’re not a necessary part, and in some cases, I’d argue that they’re not even a desirable part of a cleanly separated software defined infrastructure. And that is what I’m going to cover in my next post.

Software Defined Networking – The Next Frontier

The following part of the post came from content I wrote for Evolve, a newsletter we publish out of ANZ. It’s a little long and technical for an executive focused newsletter, which is partly why it gets a little bit rushed in the end. What I’d like to do is to expand a little more on what I believe are the choices that can be made when separating the control and data planes in a software defined storage architecture, where the industry, and in particular NetApp, is today, where things are likely to go, and most importantly how to get value from this architectural shift.

Introduction

CIOs face the constant challenge of turning rapid technological developments into business advantage. As if this were not difficult enough, there are often times when multiple technologies are simultaneously released into the market, changing the IT landscape. The datacentre is currently on the cusp of such a revolution.

As it was for workforce mobility and cloud computing, it is the network that will be at the centre of these transformations. A network connects resources to intelligence and allows us to redefine what a datacenter is, and how we consume its properties.

It isn’t just incredible speed and massive bandwidth that is causing this transformation, but the fruition of an idea that’s been in development for the last decade, and that idea is Software-Defined Networking or SDN.

Software Defined Networking

This disruptive trend in the networking industry rediscovers the old idea of separating the control and data planes in network equipment. In other words, SDN liberates the higher-level network management functions from their ties to individual boxes and instead offers the vision of a “network operating system”. This allows networked applications to provision and control their networking needs using high-level open programming interfaces provided by an SDN “network controller”. The promise of this approach has meant that in a few short years, Software-Defined Networking has turned from a simple idea meant to enable new academic networking research into a potentially industry-changing technology trend.

The reason for this is that the network virtualization technology that is part of SDN is the missing piece that completes the vision of a software-defined datacenter, where compute, network and storage resources are elastic and dynamically adaptable. This network virtualization not only completes this vision, it raises the bar on how the different virtualized components integrate and interact in new, direct and more dynamic ways. This changes what IT will expect from their storage infrastructure.

SDN and the implications for Storage

Infrastructure managers who see the promise of a software defined datacentre are beginning to see storage as an important part of the infrastructure they desire to manage within the context of an SDN. However, this is only possible if the storage infrastructure itself can be separated between software that controls and manages data, and the infrastructure that stores, copies and retrieves that data. In short, storage needs to have its own control and data planes, working seamlessly as an extension of the SDN infrastructure that will be the core of the next generation datacentre.
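As a rough sketch of what that separation could look like for storage, the Python below keeps the data plane down to store, retrieve and delete, and puts every placement decision in a controller. The class names, the two tiers and the `hot` flag are all hypothetical, chosen only to illustrate the idea. This is not how any particular product, NetApp’s included, is implemented.

```python
class Backend:
    """Data plane: only stores, retrieves and deletes bytes. No policy lives here."""

    def __init__(self, name: str):
        self.name = name
        self.blobs = {}

    def store(self, key: str, data: bytes) -> None:
        self.blobs[key] = data

    def retrieve(self, key: str) -> bytes:
        return self.blobs[key]

    def delete(self, key: str) -> None:
        del self.blobs[key]


class StorageController:
    """Control plane: decides where data lives and when it moves."""

    def __init__(self, fast: Backend, capacity: Backend):
        self.fast = fast
        self.capacity = capacity
        self.location = {}  # key -> backend currently holding the data

    def write(self, key: str, data: bytes, hot: bool) -> None:
        # Hypothetical policy: hot data goes to the fast tier, the rest to capacity.
        target = self.fast if hot else self.capacity
        target.store(key, data)
        self.location[key] = target

    def read(self, key: str) -> bytes:
        return self.location[key].retrieve(key)

    def demote(self, key: str) -> None:
        # Copy to the capacity tier, then release the fast tier.
        source = self.location[key]
        if source is self.capacity:
            return
        self.capacity.store(key, source.retrieve(key))
        source.delete(key)
        self.location[key] = self.capacity


flash = Backend("server-flash")
disk = Backend("capacity-disk")
controller = StorageController(flash, disk)
controller.write("orders.db", b"current orders", hot=True)
controller.demote("orders.db")
print(controller.location["orders.db"].name)
```

The application reads and writes through the controller and never needs to know which tier currently holds its data.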

Part of the reason for wanting to separate the control plane and liberate the storage control software from the hardware is that software defined storage allows the computationally heavy aspects of storage management, such as RDMA protocol handling, advanced data lifecycle management, caching and compression, to be offloaded. The availability of large amounts of CPU power within private and public clouds opens all kinds of possibilities for both network and storage management that were simply not feasible before.

With more intelligence built into the Control-Plane, storage architects are now able to take full advantage of two major changes in the Data-Plane. The first, and perhaps the most interesting, is the increasing affordability of solid state memory such as Flash and post-Flash technology such as PCM and STT-RAM.

Optimising Performance

Phase Change Memory (PCM) and Spin-transfer torque random-access memory (STT-RAM) have the access speeds and byte addressable characteristics of the Dynamic RAM (DRAM) used in servers today, with the added and transformational benefit of the solid state persistence of Flash. These technologies are significantly more expensive than Flash is today, but the predictions are that they will surpass even the cheapest forms of Flash memory on cost within five or six years. Regardless of which technology wins, the trends are clear; within a few years the majority of a server’s storage performance requirements will be served from some form of solid state storage within the server itself. When this is combined with new network technology and software like SAP HANA, it has major implications for storage design and implementation. Imagine how your infrastructure would change if every server had terabytes of super-fast solid state memory connected together via ultra-low latency intelligent networking. The fact is that many of the reasons we implement shared storage for mission critical applications today would simply disappear.

Optimising Capacity

The second major change is the demand to store and process massive amounts of data, which increases as we are able to extract more value from that data through Big Data analysis. This coincides with a dramatic reduction in the cost of storing that data. Very high density SATA drives with capacities in excess of 10TB per disk are coming, but in order to get past some hard quantum-physics level limitations they will use new storage techniques such as shingled writes and will be built optimally to store, but never overwrite or erase data. This means the storage characteristics at the Data-Plane will be fundamentally different from those we are familiar with today. Furthermore, even with these improvements in the costs and density of magnetic disk, the economics of power consumption and datacentre real-estate mean that tape is becoming attractive again for long term archival storage. Finally, the economies of scale that large cloud providers have, and the massive computing power they are able to place in close proximity to that data, mean that those cloud providers will have a compelling value proposition for storing a large proportion of an organisation’s cold data.

Regardless of where and how this data is stored, the challenges of securing and finding that data, and managing the lifecycles of this massive amount of information, mean traditional methods of using files, folders and directories simply won’t be viable in the long term. New access and management techniques built on top of object based access to data, such as Amazon’s S3 and the open standards based CDMI interfaces, will be the management and data access protocols of choice.

Conclusion

In the end the only way to effectively combine the speed and performance of solid-state storage with the scale and price advantages of capacity optimised storage is by using a software defined storage infrastructure. It is the intelligence of the separated Control-Plane powered by commodity CPU that allows infrastructure managers and datacentre architects to take advantage of these two massive trends.

While this all talks about what will happen in the future, unlike other vendors who are only just beginning to talk about building a software defined storage infrastructure, NetApp has been planning for this future for many years now.

- Clustered Data-ONTAP was built on the principle of separating the Data-Plane and the Control-Plane and is ready to take advantage of the trends in software defined networking as they evolve and are deployed into datacentres over the next few years

- NetApp’s fully supported ONTAP-Edge software runs in a virtual machine, allowing the full power of ONTAP’s advanced data management functionality on commodity DAS, and NetApp’s V-Series controllers perform the same function at extreme scale for the largest and most mission critical environments

- NetApp has released, at no cost to the customer, Flash-Accel technology that allows commodity SSDs and 3rd party PCI based Flash cards to act as an extension of our storage cache for virtualized environments. This extends the work we have done in our separation of control and data-planes for our existing customers who have not yet moved to Clustered Data ONTAP

- NetApp has partnered with Amazon to provide private storage for AWS which allows the massive on-demand compute power to be coupled with NetApp’s storage in Amazon’s datacenters

- NetApp already provides open standards based advanced programming and automation interfaces through offerings such as NetApp Workflow Automater, the Cloud Data Management Interface and SMI-S, and continues to lead the industry in providing programmable software defined storage. These aren’t just technology tick box items, but technology that drives significant competitive advantages for companies like ING DIRECT with its “Bank in a Box”.

These are just a few of the things we’ve already done. The foundations have already been set, and what NetApp will be building and bringing to the market over the next few years will truly redefine what storage is inside the datacenter, and the value it can bring to IT and the organisations it serves.